Last time on Eukaryote Writes Blog: You learned about knitting history.

You thought you were done learning about knitting history? You fool. You buffoon. I wanted to double check some things in the last post and found out that the origins of knitting are even weirder than I guessed.

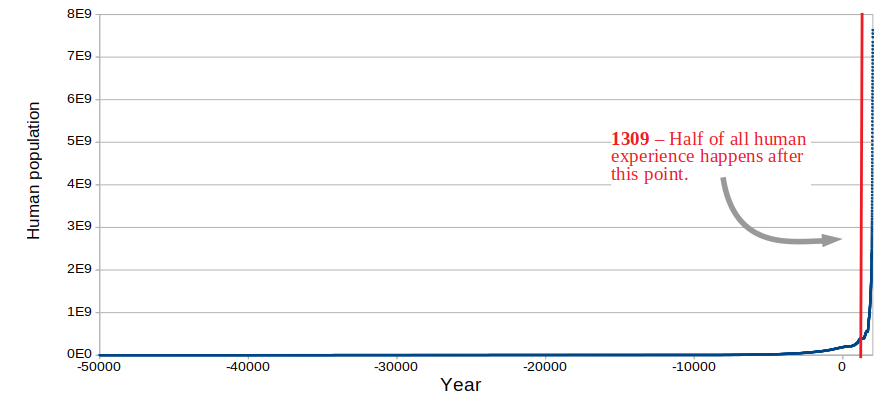

Humans have been wearing clothes to hide our sinful sinful bodies from each other for maybe about 20,000 years. To make clothes, you need cloth. One way to make cloth is animal skin or membrane, that is, leather. If you want to use it in any complicated or efficient way, you also need some way to sew that – very thin strips of leather, or taking sinew or plant fiber and spinning it into thread. Also popular since very early on is taking that thread, and turning it into cloth. There are a few ways to do this.

(Can you just sort of smush the fiber into cloth without making it into thread? Yes. This is called felting. How well it works depends on the material properties of the fiber. A lot of traditional Pacific Island cloth was felted from tree bark.)

Now with all of these, you could probably make some kind of cloth by taking threads and, by hand, shaping them into these different structures. But that sounds exhausting and nobody did that. Let’s get tools involved. Each of these structures corresponds to a different manufacturing technique.

By far, the most popular way of making cloth is weaving. Everyone has been weaving for tens of thousands of years. It’s not quite a cultural universal but it’s damn close. To weave, you need a loom.1 There are ten million kinds of loom. Most primitive looms can make a piece of cloth that is, at most, the size of the loom. So if you want to make a tunic that’s three feet wide and four feet long, you need cloth that’s at least three feet wide and four feet long, and thus, a loom that’s at least three feet wide and four feet long. You can see how weaving was often a stationary affair.

Recap

Here’s what I said in the last post: Knitting is interesting because the manufacturing process is pretty simple, needs simple tools, and is portable. The final result is also warm and stretchy, and can be made in various shapes (not just flat sheets). And yet, it was invented fairly recently in human history.

I mostly stand by what I said in the last post. But since then I’ve found some incredible resources, particularly the scholarly blogs Loopholes by Cary “stringbed” Karp and Nalbound by Anne Marie Decker, which have sent me down new rabbit-holes. The Egyptian knit socks I outlined in the last post sure do seem to be the first known knit garments, like, a piece of clothing that is meant to cover your body. They’re certainly the first known ones that take advantage of knitting’s unique properties: of being stretchy, of being manufacturable in arbitrary shapes. The earliest knitting is… weirder.

SCA websites

Quick sidenote – I got into knitting because, in grad school, I decided that in the interests of well-roundedness and my ocular health, I needed hobbies that didn’t involve reading research papers. (You can see how far I got with that). So I did two things: I started playing the autoharp, and I learned how to knit. Then, I was interested in the overlap between nerds and handicrafts, so a friend in the Society for Creative Anachronism pitched me on it and took me to a coronation. I was hooked. The SCA covers “the medieval period”; usually, 1000 CE through 1600 CE.

I first got into the history of knitting because I was checking if knitting counted as a medieval period art form. I was surprised to find that the answer was “yes, but barely.” As I kept looking, a lot of the really good literature and analysis – especially experimental archaeology – came out of blogs of people who were into it as a hobby, or perhaps as a lifestyle that had turned into a job like historical reenactment. This included a lot of people in the SCA, who had gone into these depths before and just wrote down what they found and published it for someone else to find. It’s a really lovely knowledge tradition to find one’s self a part of.

Aren’t you forgetting sprang?

There’s an ancient technique that gets some of the benefits of knitting, which I didn’t get to in the last post. It’s called sprang. Mechanically, it’s kind of like braiding. Like weaving, sprang requires a loom (the size of the cloth it produces) and makes a flat sheet. Like knitting, however, it’s stretchy.

Sprang shows up in lots of places – the oldest in 1400 BCE in Denmark, but also other places in Europe, plus (before colonization!): Egypt, the Middle East, Central Asia, India, Peru, Wisconsin, and the North American Southwest. Here’s a video where re-enactor Sally Pointer makes a sprang hairnet with iron-age materials.

Despite being widespread, it was never a common way to make cloth – everyone was already weaving. The question of the hour is: Was it used to make socks?

Well, there were probably sprang leggings. Dagmar Drinkler has made historically-inspired sprang leggings, which demonstrate that sprang colorwork creates some of the intricate designs we see painted on Greek statues – like this 480 BCE Persian archer.

I haven’t found any attestations of historical sprang socks. The Sprang Lady has made some, but they’re either tube socks or have separately knitted soles.

Why weren’t there sprang socks? Why didn’t sprang, widespread as it is, take on the niche that knitting took?

I think there are two reasons. One, remember that a sock is a shaped garment, tube-like, usually with a bend at the heel, and that like weaving, sprang makes a flat sheet. If you want another shape, you have to sew it in. It’s going to lose some stretch where it’s sewn at the seam. It’s just more steps and skills than knitting a sock.

The second reason is warmth. I’ve never done sprang myself – from what I can tell, it has more of a net-like openness upon manufacture, unlike knitting which comes with some depth to it. Even weaving can easily be made pretty dense simply by putting the threads close together. I think, overall, a sprang fabric garment made with primitive materials is going to be less warm than a knit garment made with primitive materials.

Those are my guesses. I bring it up merely to note that there was another thread → cloth technique that made stretchy things that didn’t catch on the same way knitting did. If you’re interested in sprang, I cannot recommend The Sprang Lady’s work highly enough.

Anyway, let’s get back to knitting.

Knitting looms

The whole thing about Roman dodecahedrons being (hypothetically) used to knit glove fingers, described in the last post? I don’t think that was actually the intended purpose, for the reasons I described re: knitting wasn’t invented yet. But I will cop to the best argument in its favor, which is that you can knit glove fingers with a Roman dodecahedron.

“But how?” say those of you not deeply familiar with various fiber arts. “That’s not needles,” you say.

You got me there. This is a variant of a knitting loom. A knitting loom is a hoop with pegs to make knit tubes. This can be the basis of a knitting machine, but you can also knit on one on its own. They make more consistent knit tubes with less required hand-eye coordination. (You can also make flat panels with them, especially a version called a knitting rake, but since all of the early knitting we’re talking about are tubes anyhow, let’s ignore that for the time being.)

Knitting on a loom is also called spool knitting (because you can use a spool with nails in it as the loom for knitting a cord) and tomboy knitting (…okay). Structurally, I think this is also basically the same thing as lucet cord-making, so let’s go ahead and throw that in with this family of techniques. (The earliest lucets are from ~1000 CE Viking Sweden and perhaps medieval Viking Britain.)

The important thing to note is that loom knitting makes a result that is, structurally, knit. It’s difficult to tell whether a given piece is knit with a loom or needles, if you didn’t see it being made. But since it’s a different technique, different aspects become easier or harder.

A knitting loom sounds complicated but isn’t hard to make, is the thing. Once you have nails, you can make one easily by putting them in a wood ring. You could probably carve one from wood with primitive tools. Or forge one. So we have the question: Did knitting needles or knitting looms come first?

We actually have no idea. There aren’t objects that are really clearly knitting needles OR knitting looms until long after the earliest pieces of knitting. This strikes me as a little odd, since wood and especially metal should preserve better than fabric, but it’s what we’ve got. It’s probably not helped by the fact that knitting needles are basically just smooth straight sticks, and it’s hard to say that any smooth straight stick is conclusively a knitting needle (unless you find it with half a sock still on it.)

(At least one author, Isela Phelps, speculates that finger-knitting, which uses the fingers of one hand like a knitting loom and makes a chunky knit ribbon, came first – presumably because, well, it’s easier to start from no tools than to start from a specialized tool. This is possible, although the earliest knit objects are too fine and have too many stitches to have been finger-knit. The creators must have used tools.)

(stringbed also points out that a piece of whale baleen can be used as circular knitting needles, and that the relevant cultures did have access to and trade in whale parts. While we have no particular evidence that they were used as such, it does mean that humanity wouldn’t have had to invent plastic before inventing the circular knitting needle; we could have had that since the prehistoric period. So, I don’t know, maybe it was whales.)

THE first knitting

The earliest knit objects we have… ugh. It’s not the Egyptian socks. It’s this.

There are a pair of long, thin, colorful knit tubes, about an inch wide, a few feet long. They’re pretty similar to each other. Due to the problems inherent in time passing and the flow of knowledge, we know one of them is probably from Egypt, and was carbon-dated to 425-594 CE. The other quite similar tube, of a similar age, has not been carbon dated but is definitely from Egypt. (The original source text for this second artifact is in German, so I didn’t bother trying to find it, and instead refer to stringbed’s analysis. See also matthewpius guestblogging on Loopholes.) So between the two of them, we have a strong guess that these knit tubes were manufactured in Egypt around 425-594 CE, about 500 years before socks.

People think it was used as a belt.

This is wild to me. Knitting is stretchy, and I did make fun of those peasants in 1300 CE for not having elastic waistlines, so I could see a knitted belt being more comfortable than other kinds of belts.2 But not a lot better. A narrow knit belt isn’t going to distribute force onto the body too differently than a regular non-stretchy belt, and regular non-stretchy belts were already in great supply – woven, rope, leather, etc. Someone invented a whole new means of cloth manufacture and used it to make a thing that already existed, slightly differently.

Then, as far as I can tell, there are no knit objects in the known historical record for five hundred years until the Egyptian socks pop up.
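How big is that gap, really? Here's a quick back-of-the-envelope check using only the two date ranges quoted in this post: 425-594 CE for the carbon-dated tube, and 1062-1149 CE for the carbon-dated socks discussed further down. Treat it as a rough sketch: the function name is made up for illustration, and it treats each range as a hard pair of endpoints, which a real radiocarbon interval is not.

```python
# Date ranges (CE) as quoted in this post. Treating them as hard endpoints
# is a simplifying assumption; radiocarbon ranges are probability intervals.
TUBE_RANGE = (425, 594)    # carbon-dated knit tube (the maybe-belt)
SOCK_RANGE = (1062, 1149)  # range quoted for the carbon-dated socks


def gap_between(earlier, later):
    """Return the (smallest, largest) gap in years between two date ranges.

    Hypothetical helper for illustration only.
    """
    smallest = later[0] - earlier[1]  # latest tube date to earliest sock date
    largest = later[1] - earlier[0]   # earliest tube date to latest sock date
    return smallest, largest


smallest, largest = gap_between(TUBE_RANGE, SOCK_RANGE)
print(f"Gap between tube and socks: {smallest} to {largest} years")
```

So "five hundred years" sits at the short end of what those two ranges allow; the gap could be a couple of centuries longer.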

Pulling objects out of the past is hard. Especially things made from cloth or animal fibers, which rot (as compared to metal, pottery, rocks, bones, which last so long that in the absence of other evidence, we name ancient cultures based on them.) But every now and then, we can. We’ve found older bodies and textiles preserved in ice and bogs and swamps.3 We have evidence of weaving looms and sewing needles and pictures of people spinning or weaving cloth and descriptions of them doing it, from before and after. I’m guessing that the technology just took a very long time to diversify beyond belts.

Speaking of which: how was the belt made? As mentioned, we don’t find anything until much later that is conclusively a knitting needle or a knitting loom. The belts are also, according to matthewpius on Loopholes, made with a structure called double knitting. The effect is (as indicated by Pallia – another historic reenactor blog!) kind of hard to do with knitting needles in the way they achieved it, but pretty simple to do with a knitting loom.

(Another Egyptian knit tube belt from an unclear number of centuries later.)

Viking knitting

You think this is bad? Remember before how I said knitting was a way of manufacturing cloth, but that it was also definable as a specific structure of a thread, that could be made with different methods?

The oldest knit object in Europe might be a cup.

You gotta flip it over.

Enhance.

That’s right, this decoration on the bottom of the Ardagh Chalice is knit from wire.

Another example is the decoration on the side of the Derrynaflan Paten, a plate made in 700 or 800 CE in Ireland. All the examples seem to be from churches, hidden by or from Vikings. Over the next few hundred years, there are some other objects in this technique. They’re tubes knitted from silver wire. “Wait, can you knit with wire?” Yes. Stringbed points out that knitting wire with needles or a knitting loom would be tough on the valuable silver wire – they could break or distort it.

What would make sense to do it with is a little hook, like a crochet hook. But that would only work on wire – yarn doesn’t have the structural integrity to be knit with just a hook, you need to support each of the active loops.

So was the knit structure just invented separately by Viking silversmiths, before it spread to anyone else? I think it might have been. It’s just such a long time before we see knit cloth, and we have this other plausible story for how the cloth got there.

(I wondered if there was a connection between the Viking knitting and their sources of silver. Vikings did get their silver from the Islamic world, but as far as I can tell, mostly from Iran, which is pretty far from Egypt and doesn’t have an ancient knitting history – so I can’t find any connection there.)

The Egyptian socks

Let’s go back to those first knit garments (that aren’t belts), the Egyptian knit blue-and-white socks. There are maybe a few dozen of these, now found in museums around the world. They seem to have been pulled out of Egypt (people think Fustat) by various European/American collectors. People think that they were made around 1000-1300 CE. The socks are quite similar: knit, made of cotton, in white and 1-3 shades of indigo, geometric designs sometimes including Kufic characters.

I can’t find a specific origin location (other than “probably Egypt, maybe Fustat?”) for any of them. The possible first sock mentioned in the last post is one of these – I don’t know if there are any particular reasons for thinking that sock is older than the others.

This one doesn’t seem to be knit OR naalbound. Anne Marie Decker at Nalbound.com thinks it’s crocheted and that the date is just completely wrong. To me, at least, this casts doubt on all the other dates of similar-looking socks.

That anomalous sock scared me. What if none of them had been carbon-dated? Oh my god, they’re probably all scams and knitting was invented in 1400 and I’m wrong about everything. But I was told in a historical knitting facebook group that at least one had been dated. I found the article, and a friend from a minecraft discord helped me out with an interlibrary loan. I was able to locate the publication where Antoine de Moor, Chris Verhecken-Lammens and Mark Van Strydonck did in fact carbon-date four ancient blue-and-white knit cotton socks and found that they dated back to approximately 1100 CE – with a 95% chance that they were made somewhere between 1062 and 1149 CE. Success!

Helpful research tip: for the few times when the SCA websites fail you, try your facebook groups and your minecraft discords.

Estonian mitten

Also, here’s a knit fragment of a mitten found in Estonia. (I don’t have the expertise or the mitten to determine it myself, but Anneke Lyffland (another SCA name), a scholar who studied it, is aware of cross-knit-looped naalbinding – like the Peruvian knit-lookalikes mentioned in the last post – and doesn’t believe this was naalbound.) It was part of a burial that was dated from 1238 – 1299 CE. This is fascinating and does suggest a culture of knitted practical objects, in Eastern Europe, in this time period. This is the earliest East European non-sock knit fabric garment that I’m aware of.

But as far as I know, this is just the one mitten. I don’t know much about archaeology in the area and era, and can’t speculate as to whether this is evidence that knitting was rare or whether we have very few wool textiles from the area and it’s not that surprising. (The voice of shoulder-Thomas-Bayes says: Lots of things are evidence! Okay, I can’t speculate as to whether it’s strong evidence, are you happy, Reverend Bayes?) Then again, a bunch of speculation in this post is also based on two maybe-belts, so, oh well. Take this with salt.

By the way, remember when I said crochet was super-duper modern, like invented in the 1700s?

Literally a few days ago, who but the dream team of Cary “stringbed” Karp and Anne Marie Decker published an article in Archaeological Textiles Review identifying several ancient probably-Egyptian socks thought to be naalbound as being actually crocheted.

This comes down to the thing about fabric structures versus techniques. There’s a structure called slip stitch that can be either crocheted or naalbound. Since we know naalbinding is that old, if you’re looking at an old garment and see slip stitch, maybe you say it was naalbound. But basically no fabric garment is just continuous structure all the way through. How do the edges work? How did it start and stop? Are there any pieces worked differently, like the turning of a heel or a cuff or a border? Those parts might be more clearly worked with a crochet hook than a naalbinding needle. And indeed, that’s what Karp and Decker found. This might mean that those pieces are forgeries – no carbon dating. But it might mean crochet is much much older than previously thought.

My hypothesis

Knitting was invented sometime around or perhaps before 600 CE in Egypt.

From Egypt, it spread to other Muslim regions.

It spread into Europe via one or more of these:

- Ordinary cultural diffusion northwards

- Islamic influence in the Iberian Peninsula

  - In 711 CE, the Umayyad Caliphate began conquering the peninsula, which became Al-Andalus…

    - Kicking off a lot of Islamic presence in and control over the area up until 1400 CE or so…

      - …mostly out of the Arabic Maghreb

- Meanwhile, starting in 1095 CE, the Latin Church called for armies to take Jerusalem from Muslim rule, kicking off the Crusades.

  - …Peppering Arabic influences into Europe, particularly France, over the next couple centuries.

… Also, the Vikings were there. They separately invented the knitting structure in wire, but never got around to trying it out in cloth, perhaps because the required technique was different.

Another possibility

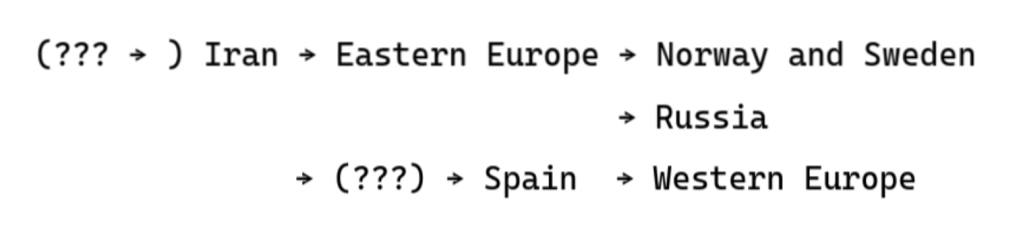

Wrynne, AKA Baronness Rhiall of Wystandesdon (what did I say about SCA websites?), a woman who knows a thing or two about socks, believes that based on these plus the design of other historical knit socks, the route goes something like:

I don’t know enough about socks to have a sophisticated opinion on her evidence, but the reasoning seems solid to me. For instance, as she explains, old Western European socks are knit from the cuff of the sock down, whereas old Middle Eastern and East European socks are knit from the toe of the sock up – which is also how Eastern and Northern European naalbound socks were shaped. Baronness Rhiall thinks Western Europe invented its sockmaking techniques independently, based on only having had a little experience with a few late 1200s/1300s knit pieces from Moorish artisans.

What about tools?

Here’s my best guess: The Egyptian tubes were made on knitting looms.

The Viking tubes were invented separately, made with a metal hook as stringbed speculates, and never had any particular connection to knitting yarn.

At some point, in the Middle East, someone figured out knitting needles. The Egyptian socks and Estonian mitten and most other things were knit in the round on double-ended needles.

I don’t like this as an explanation, mostly because of how it posits 3 separate tools involved in the earliest knit structures – that seems overly complicated. But it’s what I’ve got.

Knitting in the tracks of naalbinding

I don’t know if this is anything, but here are some places we also find lots of naalbinding, beginning from well before the medieval period: Egypt. Oman. The UAE. Syria. Israel. Denmark. Norway. Sweden. Sort of the same path that we predict knitting traveled in.

I don’t know what I’m looking at here.

- Maybe this isn’t real and these places just happen to preserve textiles better

- Longstanding trade or migration routes between North Africa, the Middle East, and Eastern Europe?

- Culture of innovation in fiber?

- Maybe fiber is more abundant in these areas, and thus there was more affordance for experimenting. (See below.)

It might be a coincidence. But it’s an odd coincidence, if so.

Why did it take so long for someone to invent knitting?

This is the question I set out to answer in the initial post, but then it turned into a whole thing and I don’t think I ever actually answered my question. Very, very speculatively: I think knitting is just so complicated that it took thousands of years, and an environment rich in fiber innovation, for someone to invent and make use of the series of steps that is knitting.

Take this next argument with a saltshaker, but: my intuitions back this up. I have a good visual imagination. I can sort of “get” how a slip knot works. I get sewing. I understand weaving, I can boil it down in my mind to its constituents.

There are birds that do a form of sewing and a form of weaving. I don’t want to imply that if an animal can figure it out, it’s clearly obvious – I imagine I’d have a lot of trouble walking if I were thrown into the body of a centipede, and chimpanzees can drastically outperform humans on certain cognitive tasks – but I think, again, it’s evidence that it’s a simpler task in some sense.

Same with sprang. It’s not a process I’m familiar with, but watching Sally Pointer do it on a very primitive loom, I can understand it and could probably do it now. Naalbinding – well, it’s knots, and given a needle and knowing how to make a knot, I think it’s pretty straightforward to tie a bunch of knots on top of each other to make fabric out of it.

But I’ve been knitting for quite a while now and have finished many projects, and I still can’t say I totally get how knitting works. I know there’s a series of interconnected loops, but how exactly they don’t fall apart? How the starting string turns into the final project? It’s not in my head. I only know the steps.

I think that if you erased my memory and handed me some simple tools, especially a loom, I could figure out how to make cloth by weaving. I think there’s also a good chance I could figure out sprang, and naalbinding. But I think that if you handed me knitting needles and string – even if you told me I was trying to get fabric made from a bunch of loops that are looped into each other – I’m not sure I would get to knitting.

(I do feel like I might have a shot at figuring out crochet, though, which is supposedly younger than any of these anyway, so maybe this whole line of thinking means nothing.)

Idle hands as the mother of invention?

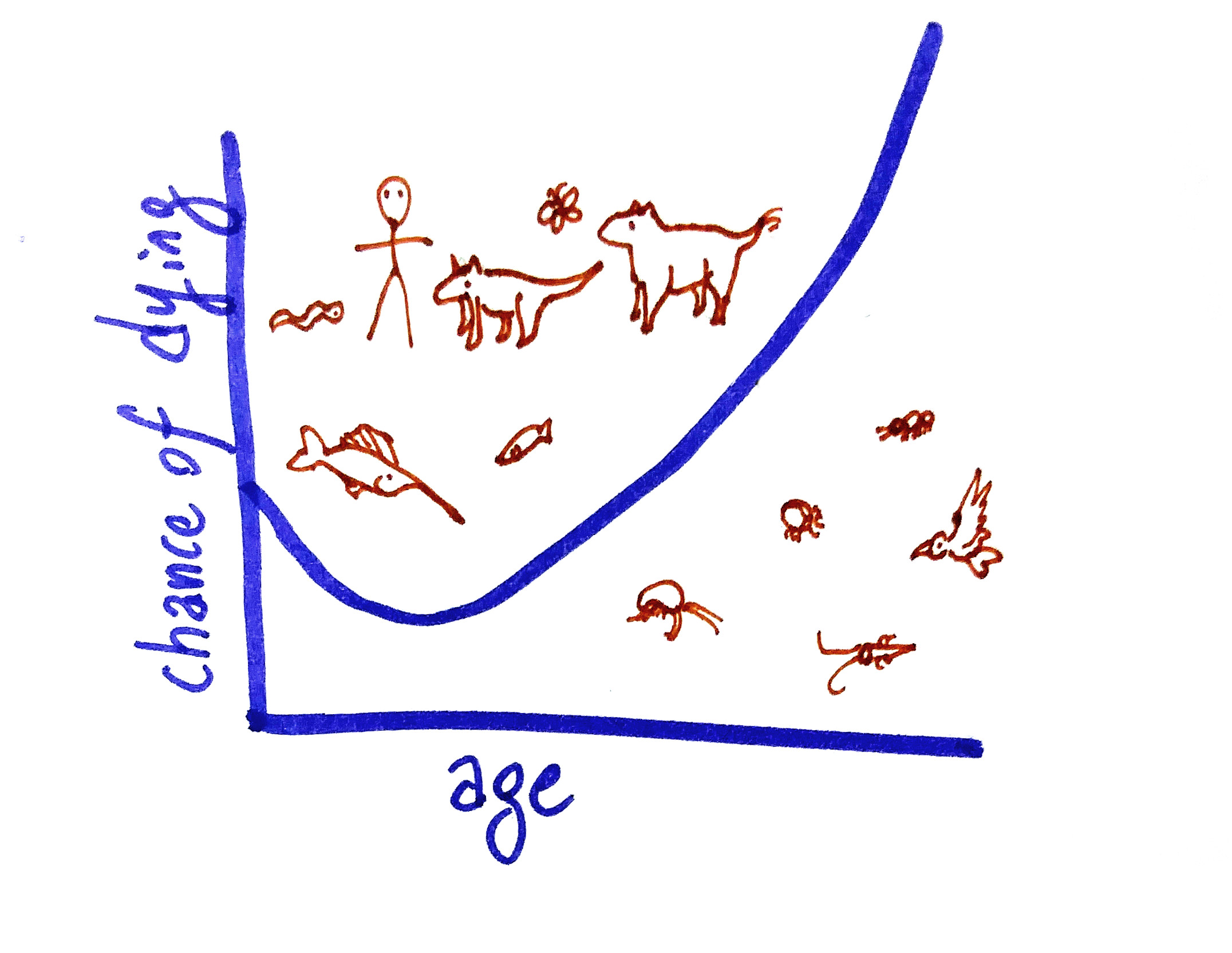

Why do we innovate? Is necessity the mother of invention?

This whole story suggests not – or at least, that’s not the whole story. We have the first knit structures in belts (already existed in other forms) and decorative silver wire (strictly ornamental.) We have knit socks from Egypt, not a place known for demanding warm foot protection. What gives?

Elizabeth Wayland Barber says this isn’t just knitting – she points to the spinning jenny and the power loom, both innovations in yarn production in general, that were invented recently by men despite thousands of previous years of women producing yarn. In Women’s Work: The First 20,000 Years, she writes:

“Women of all but the top social and economic classes were so busy just trying to get through what had to be done each day that they didn’t have excess time or materials to experiment with new ways of doing things.”

This suggests a somewhat different mechanism of invention – sure, you need a reason to come up with or at least follow up on a discovery, but you also need the space to play. 90% of everything is crap, so you need to be really sure that you can throw away (or unravel, or afford the time to re-make) 900 crappy garments before you hit upon the sock.

Bill Bryson, in the introduction to his book At Home, writes about the phenomenon of clergy in the UK in the 1700s and 1800s. To become an ordained minister, one needed a university degree, but not in any particular subject, and little ecclesiastical training. Duties were light; most ministers read a sermon out of a prepared book once a week and that was about it. They were paid in tithes from local landowners. Bryson writes:

“Though no one intended it, the effect was to create a class of well-educated, wealthy people who had immense amounts of time on their hands. In consequence many of them began, quite spontaneously, to do remarkable things. Never in history have a group of people engaged in a broader range of creditable activities for which they were not in any sense actually employed.”

He describes some of the great amount of intellectual work that came out of this class, including not only the aforementioned power loom, but also: scientific descriptions of dinosaurs, the first Icelandic dictionary, Jack Russell terriers, submarines, aerial photography, the study of archaeology, Malthusian traps, the telescope that discovered Uranus, werewolf novels, and – courtesy of the original Thomas Bayes – Bayes’ theorem.

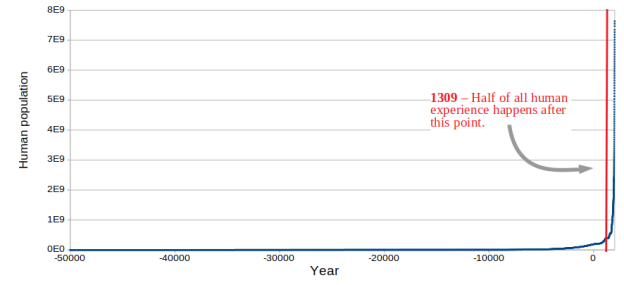

I offhandedly posited a random per-person effect in the previous post – each individual has a chance of inventing knitting, so eventually someone will figure it out. There’s no way this can be the whole story. A person in a culture that doesn’t make clothes mostly out of thread, like the traditional Inuit (thread is used to sew clothes, but the clothes are very often sewn out of animal skin rather than woven fabric) seems really unlikely to invent knitting. They wouldn’t have lots of thread about to mess around with. So you need the people to have a degree of familiarity with the materials. You need some spare resources. Some kind of cultural lenience for doing something nonstandard.

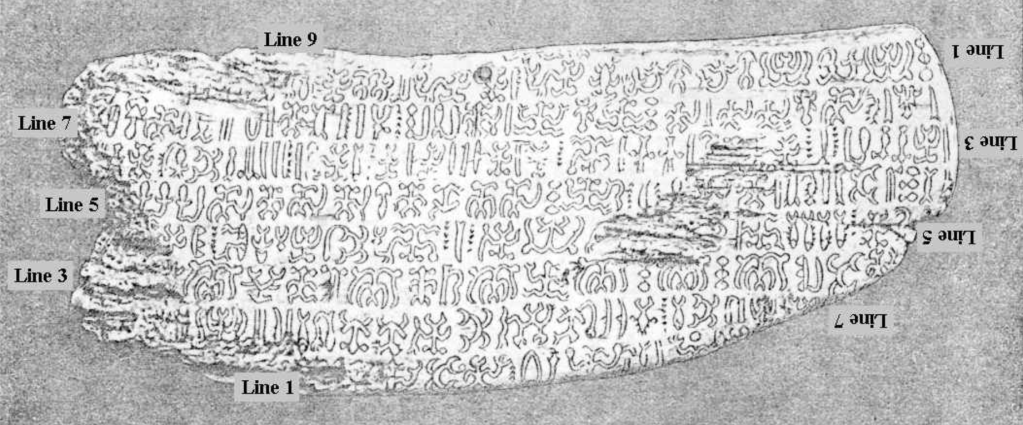

…But is that the whole story? The Incan Empire was enormous, with 12,000,000 citizens at its height. They didn’t have a written language. They had the quipu system for recording numbers with knotted string, but they didn’t have a written language. (Their neighbors, the Mayans, did.) Easter Island, between its colonization by humans in 1000 CE and its worse colonization by Europeans in 1700 CE, had a maximum population of maybe 12,000. It’s one of the most remote islands in the world. In isolation from other societies, they did develop a written language, in fact Polynesia’s only native written language.

I don’t know what to do with that.

Still. My rough model is:

![A businessy chart labelled "Will a specific group make a specific innovation?" There are three groups of factors feeding into each other. First is Person Factors, with a picture of a person in a power wheelchair: Consists of [number of people] times [degree of familiarity with art]. Spare resources (material, time). And cultural support for innovation. Second is Discovery Factors, with a picture of a microscope: Consists of how hard the idea "is to have", benefits from discovery, and [technology required] - [existing technology]. ("Existing technology" in blue because that's technically a person factor.) Third is Special Sauce, with a picture of a wizard. Consists of: Survivorship Bias and The Easter Island Factor (???)](https://eukaryotewritesblog.files.wordpress.com/2023/02/innovation.png?w=1024)

The concept of this chart amused me way too much not to put it in here. Sorry.

(“Survivorship bias” meaning: I think it’s safe to say that if your culture never developed (or lost) the art of sewing, the culture might well have died off. Manipulating thread and cloth is just so useful! Same with hunting, or fishing for a small island culture, etc.)

…What do you mean Loopholes has articles about the history of the autoharp?! My Renaissance man aspirations! Help!

Delightful: A collection of 1900’s forgeries of the Paracas textile. They’re crocheted rather than naalbound.

1 (Uh, usually. You can finger weave with just a stick or two to anchor some yarn to but it wasn’t widespread, possibly because it’s hard to make the cloth very wide.)

2 I had this whole thing ready to go about how a knit belt was ridiculous because a knit tube isn’t actually very stretchy “vertically” (or “warpwise”), and most of its stretch is “horizontal” (or “weftwise”). But then I grabbed a knit tube (fingerless glove) in my environment and measured it at rest and stretched, and it stretched about as far both ways. So I’m forced to consider that a knit belt might be a reasonable thing to make for its stretchiness. Empiricism: try it yourself!

3 Fun fact: Plant-based fibers (cotton, linen, etc) are mostly made of carbohydrates. Animal-based fibers (silk, wool, alpaca, etc) and leather are mostly made of protein. Fens are wetlands that are alkaline and bogs are acidic. Carbohydrates decay in acidic bogs but are well-preserved in alkaline fens. Proteins dissolve in alkaline fens but last in acidic bogs. So it’s easier to find preserved animal material or fibers in bogs and preserved plant material or fibers in fens.